ZKP — Deep Dive Into powersoftau

I wonder if folks in the blockchain learning community have the similar experience as myself — your mind is blowing up everyday as you learn something new, and you need to catch up yourself and chew on all the new knowledge. Lately I got some time to look into powersoftau. For those of you with some background in Zero Knowledge Proof algorithms, you should already know that trusted setting is required before applying certain Zero Knowledge Proof algorithms. For example, Groth16 requires separate configurations to different circuits and generates CRS. PLONK, on the other hand, is an Univeral Zero Knowledge Proof algorithm. It only requires one setting to multiple circuits within a certain range. How to allow multiple participating agents securely set up trusted service, and generate Zero Knowledge Proof trusted parameter — this is where powersoftau comes in handy.

powersoftau utilizes MPC and randomized Beacon to complete the trusted setting.

Theoretical Background

The open source project powersoftau is based on below paper published in 2017:

Scalable Multi-party Computation for zk-SNARK Parameters in the Random Beacon Model

https://eprint.iacr.org/2017/1050

Player / Coordinator

The entire trusted setting has two components: Player and Coordinator. Coordinator sends the data by previous player to the next player. After all Players done with the calculation, Coordinator will then publish the random Beacon for another iteration of calculation to generate the final parameter.

Coordinator does not require trusted third party, since Coordinator can be verified by publishing the parameters generated by random Beacon. By publihsing random Beacon, it means that before certain timestamp we don’t know the random data, and after this timestamp, everyone can verify the random data.

Phase1/Pahse2

The second chapter in the paper gives an overall summary of the contract. Assume that Alice and Bob are two Players, the whole contract can be divided into two phases: Phase1 and Phase2.

Phase1 calculates the tau value in the given polynomial. Phase2 calculates the value for entire polunomial. Note that these values are bounded in a finite field. That is to say, Phase1 outputs the result of the MPC of a single term. Phase 2 outputs the result of the MPC of multiple terms combination. Phase1 has similar calculation process as Phase2 — step by step by each Player. There are two main functions mentioned in the contract: CONSISTENT and POK.

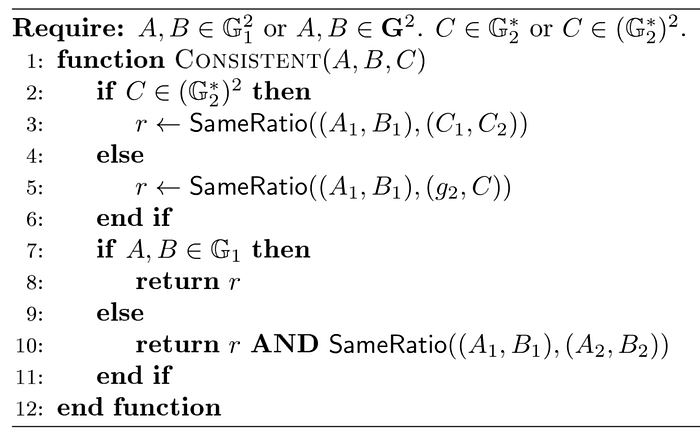

CONSISTENT

CONSISTENT is used to check whether two results of pairing functions are equal.

To check whether A, B, C satisfies e(A,B) = e(C1,C2), we note it this way: consistent(A-B; C).

POK

POK — proof of knowledge.

Parameter alpha represents the “knowledge”. In order to prove a given alpha, we calculate the result of r (the corresponding point of alpha on G1 and the public string v), and generate the point on G2. By providing corresponding point of alpha on G1, and alpha*r, we have proved that alpha as a known kowledge. This proof can be verified by pairing functions.

Contract Logic

The Chapter 4 of the paper illustrates the definition of the whole contract. Here, circuits are extracted into two structures: one structure that calculates exclusively circuits with multipliatioin and division only, and the other is the combination.

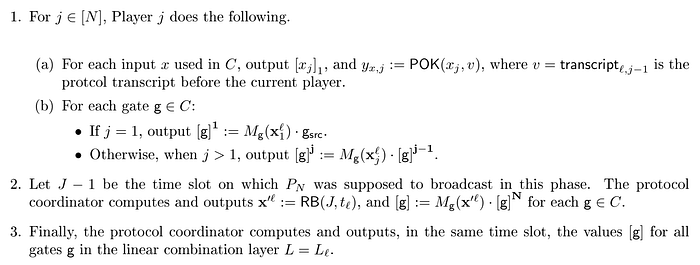

For a given circuit, the proof calculation follows below process:

1(a) — Apply POK proof to each input of the circuit. Note that v is generated by calculation on the previous layer.

1(b) — Calculate current Player result based on previous Player. Here, M indicates the polynomial of the circuit.

2 — Apply random Beacon

The proof process is rather clear, compared to the calculation process:

Verify the POK proof, and validate the calculation of M.

Chapter 6&7 has all the details on Groth16 algorithm parameter generating process. Feel free to check it out if you are interested.

Analyzing the Source Code

powersoftau has multiple projects on github and they have pretty simiar idea. Let’s take matter labs code as an example and analyze the code logic.

https://github.com/matter-labs/powersoftau

Thie project implements the trusted setting of Groth16 algorithm, which supports BN256 and BLS12_381 curves. Note that this project implements only Phase1, the combination part in Phase2 is not covered in the project.

Code Structure

accumulator.rs and batched_accumulator.rs. Conveying the idea of its name, accumulator “accumulates” multiple Players’ calculation result. There are multiple procedures under the bin directory, such as implementation calculation(including the Beacon application calculation), proof calculation, and so on. parameters.rs is the parameter config file. bn256/small_bn256/bls12_381 is the param configuration of the corresponding curve. Keypair.rs manages both the public and private keys. utils.rs implements some of the auxiliary functions. Let’s start with the keypair.

keypair

keypair implements the PublicKey and PrivateKey pair. PrivateKey is straight-forward — it contains tau, alpha, and beta:

pub struct PrivateKey<E: Engine> {

pub tau: E::Fr,

pub alpha: E::Fr,

pub beta: E::Fr

}PrivateKey is randomly generated. PublicKey is a little bit more complicated:

pub struct PublicKey<E: Engine> {

pub tau_g1: (E::G1Affine, E::G1Affine),

pub alpha_g1: (E::G1Affine, E::G1Affine),

pub beta_g1: (E::G1Affine, E::G1Affine),

pub tau_g2: E::G2Affine,

pub alpha_g2: E::G2Affine,

pub beta_g2: E::G2Affine

}Generate corresponding points of tau, alpha and beta on G1/G2. The three of the have similar calcation methods. Here is the detail on generating process of tau corresponded PublicKey:

let mut op = |x: E::Fr, personalization: u8| {

// Sample random g^s

let g1_s = E::G1::rand(rng).into_affine();

// Compute g^{s*x}

let g1_s_x = g1_s.mul(x).into_affine();

// Compute BLAKE2b(personalization | transcript | g^s | g^{s*x})

let h: generic_array::GenericArray<u8, U64> = {

let mut h = Blake2b::default();

h.input(&[personalization]);

h.input(digest);

h.input(g1_s.into_uncompressed().as_ref());

h.input(g1_s_x.into_uncompressed().as_ref());

h.result()

};

// Hash into G2 as g^{s'}

let g2_s: E::G2Affine = hash_to_g2::<E>(h.as_ref()).into_affine();

// Compute g^{s'*x}

let g2_s_x = g2_s.mul(x).into_affine();

((g1_s, g1_s_x), g2_s_x)

};g1_s is generated randomly on G. g1_s_x is xg1_s. g2_s is dependent on a digest data and the g1_s point. That is to say, before we know the points of g1_s and g1_s_x, and the related digest information, everyone can calculate this value. g2_s_x is xg_s。

The digest information is the has result of previous Player, and the details of calculation wiill be explained in the bin code. Since we already know g1_s, g1_s_x and digest, we can calculate g2_s. Therefore, PublicKey information ony requires these three points: ((g1_s, g1_s_x), g2_s_x).

accumulator

Accumulator is in charge of summing up all parameter information from all Players. The parameter information are clearly documented here:

pub struct Accumulator<E: Engine, P: PowersOfTauParameters> {

/// tau^0, tau^1, tau^2, ..., tau^{TAU_POWERS_G1_LENGTH - 1}

pub tau_powers_g1: Vec<E::G1Affine>,

/// tau^0, tau^1, tau^2, ..., tau^{TAU_POWERS_LENGTH - 1}

pub tau_powers_g2: Vec<E::G2Affine>,

/// alpha * tau^0, alpha * tau^1, alpha * tau^2, ..., alpha * tau^{TAU_POWERS_LENGTH - 1}

pub alpha_tau_powers_g1: Vec<E::G1Affine>,

/// beta * tau^0, beta * tau^1, beta * tau^2, ..., beta * tau^{TAU_POWERS_LENGTH - 1}

pub beta_tau_powers_g1: Vec<E::G1Affine>,

/// beta

pub beta_g2: E::G2Affine,

/// Keep parameters here

pub parameters: P

}Note that tau has different number of points on G1 nad G2. They are TAU_POWERS_G1_LENGTH and TAU_POWERS_LENGTH. The two macros are defined in parameters.rs:

const TAU_POWERS_LENGTH: usize = (1 << Self::REQUIRED_POWER)

const TAU_POWERS_G1_LENGTH: usize = (Self::TAU_POWERS_LENGTH << 1) - 1;Accumulator mainly provides two functions transform and verify_transform. transform function adds up PrivateKey based on current Accumulator:

pub fn transform(&mut self, key: &PrivateKey<E>)

{

...

batch_exp::<E, _>(&mut self.tau_powers_g1, &taupowers[0..], None);

batch_exp::<E, _>(&mut self.tau_powers_g2, &taupowers[0..P::TAU_POWERS_LENGTH], None);

batch_exp::<E, _>(&mut self.alpha_tau_powers_g1, &taupowers[0..P::TAU_POWERS_LENGTH], Some(&key.alpha));

batch_exp::<E, _>(&mut self.beta_tau_powers_g1, &taupowers[0..P::TAU_POWERS_LENGTH], Some(&key.beta));

self.beta_g2 = self.beta_g2.mul(key.beta).into_affine();

...

}Here, taupowers is the result of tau⁰, tau¹…tau^(TAU_POWERS_G1_LENGTH).

verify_transform verifies the result of transform function. It requires the pre-calculation Accumulator, post-calculation Accumulator, PublicKey and digest information. Take tau as an example, the logic can be explained as follow:

pub fn verify_transform<E: Engine, P: PowersOfTauParameters>(before: &Accumulator<E, P>, after: &Accumulator<E, P>, key: &PublicKey<E>, digest: &[u8]) -> boolBased on the g2_s result (which includes g1_s, g1_s_x and digest info), we first verify if the PubicKey information is correct.

if !same_ratio(key.tau_g1, (tau_g2_s, key.tau_g2)) {POK verification process is to verify public key information.

Then we check if tau⁰ equals to 1:

// Check the correctness of the generators for tau powers

if after.tau_powers_g1[0] != E::G1Affine::one() {

return false;

}

if after.tau_powers_g2[0] != E::G2Affine::one() {

return false;

}Validate the tau calculation:

if !same_ratio((before.tau_powers_g1[1], after.tau_powers_g1[1]), (tau_g2_s, key.tau_g2)) {Validate calculation on the other powers of tau:

if !same_ratio(power_pairs(&after.tau_powers_g1), (after.tau_powers_g2[0], after.tau_powers_g2[1])) {

return false;

}

if !same_ratio(power_pairs(&after.tau_powers_g2), (after.tau_powers_g1[0], after.tau_powers_g1[1])) {

return false;

}power_pairs is a pretty interesting design. To validate all the power calculations for the corresponding points, power_pairs take the points of corresponding power and multiply by a single random value, then accumulate these results dislocatively. One validation can garantee that multiple points follow the ascending power order.

bin

bin implements the whole trusted setting contract, including four operations:: 1/new (initialize Accumulator) 2/ compute (one iteration of parameter calculation) 3/ verify (verify the calculation results) 4/ beacon(generate randomized beacon parameter calculation).

We put emphasis on the compute and verify logic here, and other logic follows the similar fashion. These implementations locate at compute_constrainted.rs and verify_transform_constrainted.rs.

compute receives the challenge file, generate response file. verify takes the challenge file, and the last response to generate new_challenge.

The hash value of previous challenge becomes digest for next Player’s key pair. Certain Player generates parameters and his PublicKey become response, as well as the next Player’s challenge. Since the hash value of previous challege is going to be the verification of next PublicKey, the order of Players is set in this way.

Here is the entire flow of the contract:

new -> compute -> (verify) compute … -> (verify) compute -> (verify) beacon

Conclusion:

powersoftau utilizes MPC and randomized Beacon to complete the trusted configuration. With the POK algorithm, it implements the key pairs that can be later verified, and establish binding with the previous Player’s result. The trusted setting participants number can increase, and the Players only need to follow the order to participate in the computation. Coordinator will verify the results after receiving from one Player, then send it to the next Player.